How much gold can I give out on this level without breaking the game economy? Is this boss too tough? And will the player ever find that hidden game item? In the past five years at Grey Alien Games, we’ve developed several automated testing algorithms to answer these kinds of questions.

In recent years, our studio has developed a series of increasingly complex solitaire games for PC, with our own game engine coded in BlitzMax. As a small indie studio, playtesting has primarily involved giving early builds of the game to family, friends, and fans, and watching players at shows or harvesting log files of their play sessions.

This has many advantages, such as seeing how individuals with different play styles approach the game, watching them grasp (or fail to understand) key concepts, and asking them to explain their reasoning as they play. As developers, we can then adjust the pacing or change when we introduce new elements. We can also spot bugs.

The disadvantages of this approach relate to fully testing the game systems themselves. As our games are solitaire-based, each level involves a pack of cards being ‘dealt’ onto the play field in a set layout, but with the cards in random order.

This is overlaid with puzzle elements that lock down various cards, and items that incrementally boost the player’s ability throughout the game. In our later games, the cards chosen by the player gradually power up weapons used in duels, and an AI enemy also plays solitaire. The fact that a player got through a level successfully once is no guarantee that it will work the 30th or 1000th time those cards are dealt.

Furthermore, volunteer playtesters seldom play a game through to the end, and we wanted to be sure that our games were correctly balanced, with no bugs, right up to the final level. Automated testing gives us additional peace of mind that when we ship, we’re shipping the best game we can.

Thousands of results

Jake Birkett – game developer, Grey Alien founder, and also my husband – first addressed these issues when making Regency Solitaire. “I wrote an algorithm to play the solitaire level in what I felt was the most optimised way,” he says. At the press of a button, the algorithm plays through a level in milliseconds. “I can run that a hundred or a thousand times and get a whole bunch of results out.”

The kind of data we were looking for was:

– How difficult is it?

– How much gold does the player earn?

– What’s the biggest combo they can get?

(A combo is a long chain of card matches in solitaire; larger combos generate greater rewards.)
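To make the idea concrete, here is a minimal Python sketch of this kind of test run. It is not Jake’s BlitzMax code: the rules are a stripped-down, golf-style solitaire (play a card one rank above or below the current one), and the layout sizes, the greedy strategy, and the gold formula are all assumptions made up for illustration.

```python
import random
from statistics import mean


def play_level(rng, columns=7, depth=4, stock_size=23):
    """Play one simplified deal greedily and return the stats for that run."""
    deck = [rank for rank in range(1, 14) for _ in range(4)]  # 52 cards, ranks only
    rng.shuffle(deck)
    tableau = [[deck.pop() for _ in range(depth)] for _ in range(columns)]
    stock = [deck.pop() for _ in range(stock_size)]
    foundation = stock.pop()
    combo = best_combo = gold = 0
    while any(tableau):
        # Only the top card of each column can be played, and only if it is
        # one rank above or below the current foundation card.
        playable = [col for col in tableau if col and abs(col[-1] - foundation) == 1]
        if playable:
            col = max(playable, key=len)  # greedy: dig into the deepest column
            foundation = col.pop()
            combo += 1
            best_combo = max(best_combo, combo)
            gold += combo  # hypothetical reward: longer chains pay more per card
        elif stock:
            foundation = stock.pop()
            combo = 0  # drawing from the stock breaks the chain
        else:
            break  # no moves and no stock left
    cards_left = sum(len(col) for col in tableau)
    return {"cards_left": cards_left, "best_combo": best_combo, "gold": gold}


def run_level_test(runs=100, seed=1):
    """Repeat the level many times and summarise the results."""
    rng = random.Random(seed)
    results = [play_level(rng) for _ in range(runs)]
    return {
        "runs": runs,
        "perfects": sum(r["cards_left"] == 0 for r in results),
        "average_cards_left": mean(r["cards_left"] for r in results),
        "average_gold": mean(r["gold"] for r in results),
        "average_combo": mean(r["best_combo"] for r in results),
        "biggest_combo": max(r["best_combo"] for r in results),
    }


summary = run_level_test(runs=100)
```

Seeding the random number generator means a surprising result can be reproduced exactly, which helps when a run exposes a bug.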

Let’s take a look at the types of variables we test for our puzzle levels. If we run the test algorithm 100 times, then we get the output you can see in Figure 1.

Figure 1: When we perform test runs, Jake can set initial starting conditions. This example is a raw level, and the player has no special abilities or other modifiers.

Ranking levels

‘Perfects’ shows how often the layout can be completely cleared. In this case, 95/100 could be cleared, so it’s a pretty easy layout. ‘Average cards left’ is another output that indicates the level of difficulty.

I will typically design a large number of levels and put them through this puzzle test algorithm, then create a spreadsheet to rank them in terms of difficulty. We then generally use this ranking to assign levels in increasing difficulty as the game progresses.
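The ranking itself is done in a spreadsheet, but the same ordering can be sketched in a few lines of Python. The level names and most of the numbers below are invented for illustration, and sorting by perfects first and average cards left second is an assumption about how ties might be broken.

```python
from dataclasses import dataclass


@dataclass
class LevelResult:
    name: str
    perfects: int               # runs in which the layout was fully cleared
    average_cards_left: float
    runs: int = 100


def rank_by_difficulty(results):
    """Easiest first: higher perfect rate, then fewer cards left on average."""
    return sorted(results, key=lambda r: (-r.perfects / r.runs, r.average_cards_left))


# Illustrative values only, not real test output.
levels = [
    LevelResult("layout_c", 41, 6.2),
    LevelResult("layout_a", 95, 0.3),
    LevelResult("layout_b", 72, 2.1),
]
ordered = [r.name for r in rank_by_difficulty(levels)]
```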

It’s worth noting that towards the end of the game, players will have collected more items or skills. “Normally, everything you get in the game makes the levels easier,” says Jake. “So, if this level is ‘95% easy’ without anything, then it’s going to be very easy once you’ve got all these extra special abilities.” So, in addition to this basic level difficulty ranking, we can also assign starting conditions for any stage of the game, and retest individual levels to see how challenging they will actually be for a player who has all the in-game items.

In the level editor, we can make a level more difficult by adding deeper stacks of cards or multiple locks or keys, or adding suit locks and burying keys slightly further down. Every time we bury a key one card further down, the level gets harder to beat.

Star players

Players are awarded stars depending on how many cards are left on the tableau at the end of a level. In our latest game, Ancient Enemy, we display what the cut-off points are for getting zero, one, two, or three stars on screen for each level, and we set these individually for each duel level based on the difficulty discovered with our testing algorithm. Hence our readout for ‘average stars’.
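One way to turn test data into star cut-offs is sketched below in Python. The article does not say how the cut-offs are actually chosen, so the quartile rule in `suggest_cutoffs` is purely an assumption, and the sample values are made up.

```python
from statistics import mean


def stars_for(cards_left, cutoffs):
    """cutoffs = (max cards left for 3 stars, for 2 stars, for 1 star)."""
    three, two, one = cutoffs
    if cards_left <= three:
        return 3
    if cards_left <= two:
        return 2
    if cards_left <= one:
        return 1
    return 0


def suggest_cutoffs(cards_left_samples):
    """Hypothetical rule: place the cut-offs at the quartiles of the test runs."""
    ordered = sorted(cards_left_samples)

    def at(fraction):
        index = min(len(ordered) - 1, int(fraction * len(ordered)))
        return ordered[index]

    return at(0.25), at(0.5), at(0.75)


samples = [0, 0, 1, 2, 3, 4, 5, 6, 8, 10]  # cards left in ten made-up runs
cutoffs = suggest_cutoffs(samples)
average_stars = mean(stars_for(c, cutoffs) for c in samples)
```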

In Ancient Enemy, player health persists across levels and within chapters. So, using automated testing to track attrition over several fights and check the game is winnable is important.
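A rough Python sketch of an attrition check follows. Each fight is reduced to a random damage roll, which is a stand-in for a full duel simulation, and the damage ranges and starting health are hypothetical.

```python
import random


def run_chapter(rng, fights, start_health=100):
    """Carry health across a chapter; return what is left, or 0 if the player dies."""
    health = start_health
    for low, high in fights:
        health -= rng.randint(low, high)  # stand-in for simulating a whole duel
        if health <= 0:
            return 0
    return health


def chapter_survival(fights, runs=1000, seed=1):
    """Return (survival rate, worst final health, average final health)."""
    rng = random.Random(seed)
    finals = [run_chapter(rng, fights) for _ in range(runs)]
    survived = sum(h > 0 for h in finals)
    return survived / runs, min(finals), sum(finals) / runs


easy_rate, easy_worst, easy_average = chapter_survival([(5, 10)] * 3)
brutal_rate, _, _ = chapter_survival([(40, 60)] * 3)
```

A survival rate close to zero suggests the chapter needs a health potion drop or weaker enemies somewhere along the way.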

‘Average combo’ is another useful value. If it’s very high, it probably means there are too many face-up cards at some point: there’s an optimum number of face-up cards for a solitaire game. (Another example of this type of rule is in a match-three puzzler like Bejeweled, where three or four gem types are too easy to match, and six or seven gem types make it too hard. So, the optimum is five.)

While generating a high combo might be fun once in a while, we don’t want every level to be like that. It destroys the novelty and throws out balancing, for example, by generating too much cash and skewing the game economy.

In the beginning, we want to teach players what a combo is and give them levels where big combos are possible, but we also want to keep the average combo under control. Conversely, if the average combo is too low, then it means that the level is too difficult. With insufficient face-up cards, the player will end up just flipping the stock looking for a new card, which isn’t fun.

The ‘playable card’ values use a tracker that looks at the whole layout throughout a level to see how many playable face-up cards there are. This gives us a heads-up about levels with bottlenecks that mean they’re too hard, or contain possible over-large combos. Whether or not story items are found is another piece of useful information, especially as some of these need to be found easily on tutorial levels.
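As an illustration of what such a tracker might report, the Python sketch below summarises the number of playable cards recorded at each step of one run. The `low` and `high` thresholds are hypothetical and would need tuning per game.

```python
def summarise_playable(counts, low=1, high=6):
    """counts: playable face-up cards recorded at each step of a single run."""
    return {
        "min": min(counts),
        "max": max(counts),
        "average": sum(counts) / len(counts),
        # Steps with too few options point to a bottleneck.
        "bottleneck_steps": [i for i, c in enumerate(counts) if c < low],
        # Steps with too many options point to a possible over-large combo.
        "glut_steps": [i for i, c in enumerate(counts) if c > high],
    }


report = summarise_playable([3, 4, 0, 2, 7, 5])  # made-up counts
```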

Algorithm tips and limitations

According to Jake, “the algorithm plays the cards in what I feel is the most optimised way to play a level – a feeling based on many years of making solitaire games and testing them. But I could still be wrong.” Unlike a real player, the algorithm doesn’t make mistakes – so it doesn’t accidentally miss a card.

But there are sometimes extra things an intelligent human player can do that the algorithm can’t. “By looking at the cards,” says Jake, “you may be able to plan ahead for a really cool combo by playing them in a particular order – the algorithm just sees what’s available now and tries to make the best choice.”

The automated tester sometimes helped us to spot issues in our code, allowing us to fix them. But sometimes there can be a bug in the test code. So, if your test code is not giving you the values or outputs you want, you may need to investigate whether the game or the test code itself is the cause.

Before running a test, it’s a good idea to estimate the type of output you expect to see. If the output is not as expected, either there’s a problem with the code or your assumptions are wrong – the item is less powerful, or there are other conditions affecting it.

“Your test case scenarios are really important as well – if they are wrong you won’t get results that are helpful,” Jake says. “For example, I’ve just been testing something that gives the player more damage when they get below a certain health, and it was really having a low effect. And I realised on these particular fights, the player’s health never got that low, because the fights were too easy.”

He had to make a harder fight, so the player experienced lower health before the item kicked in.
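Both checks can be sketched in Python: comparing a measured value against the range you predicted, and counting how often a conditional item’s trigger was actually reached. The function names, the health values, and the thresholds are all hypothetical.

```python
def check_expectation(measured, expected_low, expected_high):
    """True if the measured value falls inside the range predicted beforehand."""
    return expected_low <= measured <= expected_high


def trigger_rate(lowest_health_per_run, trigger_below):
    """Fraction of runs in which health ever dropped below the item's trigger."""
    triggered = sum(1 for h in lowest_health_per_run if h < trigger_below)
    return triggered / len(lowest_health_per_run)


# Made-up data: the lowest health reached in each of eight easy fights.
lowest_health = [62, 55, 71, 48, 66, 59, 80, 52]
rate = trigger_rate(lowest_health, trigger_below=30)

# Predicted a 20-40% damage boost from the item, measured only 3%.
boost_as_expected = check_expectation(1.03, 1.2, 1.4)
```

A trigger rate of zero shows that the scenario never exercised the item, so the weak measured effect says more about the test fights than about the item itself.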

Balancing an economy

Let’s look at balancing the game economy by tracking the amount of gold a player will have accumulated across several levels. The test algorithm outputs a CSV file so that we can view the values from 100 runs of a single level (Figure 2). In a spreadsheet, we then select one of these – for example, gold – and then use the ‘sort data’ function to show the range.

Figure 2: Once output to a spreadsheet, we can sort values to see the range of a level – in this case, gold.
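The same CSV workflow can be sketched with Python’s standard `csv` module. The column names and the three rows of data are assumptions, as the article does not list the real file’s columns.

```python
import csv
import io

FIELDS = ["run", "gold", "cards_left", "best_combo"]  # hypothetical column names


def write_runs_csv(rows, fileobj):
    """Write one row per test run, with a header line."""
    writer = csv.DictWriter(fileobj, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


def column_range(csv_text, column):
    """Sort one column, as a spreadsheet would, and return (min, max, sorted values)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    values = sorted(int(row[column]) for row in reader)
    return values[0], values[-1], values


rows = [
    {"run": 1, "gold": 140, "cards_left": 0, "best_combo": 9},
    {"run": 2, "gold": 95, "cards_left": 3, "best_combo": 6},
    {"run": 3, "gold": 120, "cards_left": 1, "best_combo": 7},
]
buffer = io.StringIO()
write_runs_csv(rows, buffer)
lowest, highest, sorted_gold = column_range(buffer.getvalue(), "gold")
```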

To balance the economy, we can use the average, minimum, and maximum gold for every level to work out the cumulative gold, and set in-game item prices accordingly (see Figure 3).

Figure 3: We can use our data to set in-game prices and balance its economy.
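The cumulative calculation is simple enough to sketch in Python. The per-level figures and the price are invented, and the sketch ignores any gold the player has already spent.

```python
from itertools import accumulate

# (minimum, average, maximum) gold per level; illustrative values only.
levels = [(80, 100, 130), (90, 120, 160), (100, 150, 200)]


def cumulative_gold(levels):
    """Running totals of minimum, average, and maximum gold after each level."""
    return {
        "min": list(accumulate(level[0] for level in levels)),
        "avg": list(accumulate(level[1] for level in levels)),
        "max": list(accumulate(level[2] for level in levels)),
    }


def first_affordable(price, running_totals):
    """1-based level after which the running total first covers the price."""
    for level_number, total in enumerate(running_totals, start=1):
        if total >= price:
            return level_number
    return None  # never affordable within these levels


totals = cumulative_gold(levels)
average_player = first_affordable(200, totals["avg"])
unlucky_player = first_affordable(200, totals["min"])
```

Comparing the minimum and average cases shows how long an unlucky player has to wait for an item compared with a typical one.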

In Figure 4, you can see a similar dataset from 100 test runs on player health. In our solitaire-driven duel games, Shadowhand and Ancient Enemy, we use these to set enemy difficulty, health potions, item drops, and so on.

Figure 4: A dataset from 100 test runs on player health.

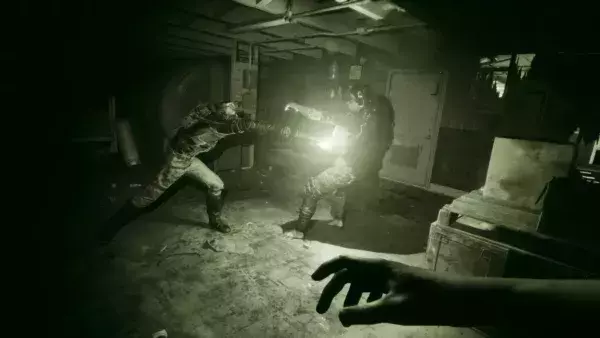

Beyond puzzle levels

After creating his first automated test for Regency Solitaire, Jake went on to make a new one for duels in subsequent games, Shadowhand and Ancient Enemy. In addition to outputting values for level design and game balancing, it also checks that if you run the battle many, many times, nothing crashes.

This test uses all the game systems: all the attacks, particle effects, sound effects, and graphics. It runs a bit slower when debug mode is (optionally) switched on, but it can be a useful decision-making tool.

We ask a slightly different set of questions: “How difficult is the fight, do I need to adjust the enemy health or the enemy intelligence, or use various other levers to adjust the balancing?”

So what’s new in the duel version? “I pitted the enemy AI against the player AI, and they play the actual game using the engine, and it tests every part of it, so it’s a lot slower to run than the algorithm that whizzes through the puzzles,” says Jake. “I have done things like switch off visual output and speed up every animation – it runs it fast, but it’s still calling the same code.”
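The outer loop of such a test might look like the Python sketch below. `stand_in_battle` is a toy replacement for running the real engine with visuals switched off, and its damage numbers are hypothetical. The harness records the seed of any battle that raises an exception so it can be replayed.

```python
import random


def soak_test(run_battle, battles=1000, seed=1):
    """Run many battles, counting player wins and recording any that crash."""
    rng = random.Random(seed)
    wins = 0
    failures = []
    for _ in range(battles):
        battle_seed = rng.randrange(2**32)
        try:
            if run_battle(battle_seed):
                wins += 1
        except Exception as exc:  # keep going, but remember how to reproduce it
            failures.append((battle_seed, repr(exc)))
    return {"battles": battles, "player_wins": wins, "failures": failures}


def stand_in_battle(seed):
    """Toy duel: player AI and enemy AI trade blows until one side falls."""
    rng = random.Random(seed)
    player, enemy = 100, 100
    while player > 0 and enemy > 0:
        enemy -= rng.randint(8, 15)   # player attacks first
        if enemy <= 0:
            break
        player -= rng.randint(5, 12)  # enemy replies
    return enemy <= 0  # True when the player wins


report = soak_test(stand_in_battle, battles=200)
```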

Where to start

We’ve found that it’s best to make an automated testing system that uses our existing code and systems. Our experience is in level-based games – other types of games may need a different approach.

At first, you can aim for a very simple result – has the player won or lost? – or some other variable like a score or gold. As long as you can run it once, you can set it to run multiple times and output those values, and even that can be useful.

A final word of warning: it’s possible to get too involved in looking at edge cases and testing every aspect of your game. That may not help move your project forward! Even so, we hope this has sparked some ideas about approaches to testing your game.